Introduction to A/B Testing

John Wanamaker famously said “Half the money I spend on advertising is wasted; the trouble is I don’t know which half.” And that is the trouble with most marketing. Marketing leaders don’t know what’s working or not working until they gather enough data. Sometimes the data tells an unexpected story.

A/B split testing done properly takes the guesswork out of growth. This guide explores the principles of A/B testing from definition to implementation. Let’s get into it.

How do you define A/B Testing?

A/B testing, also known as split testing, is a method of comparing two versions of a webpage, email, app, or any other marketing asset to determine which one performs better. By randomly splitting your audience into two groups, you can present each group with a different version (A and B) and measure which one yields the desired outcome more effectively.

A/B testing helps you make improvements to your marketing. When your marketing assets perform better, everyone wins. Your customers get the information they need to make better purchase decisions. You enjoy revenue growth.

Why is A/B Testing Essential?

Data-Driven Decision Making

A/B testing allows you to make decisions based on actual data rather than intuition or guesswork. This leads to more effective strategies and improvements.

Optimization

By continuously testing and iterating, you can optimize various elements of your marketing campaigns or user experience, leading to better performance and higher conversion rates.

Minimizing Risk

Testing changes on a small scale before full implementation helps minimize the risk of making large-scale changes that might negatively impact performance.

How A/B Testing Works

Control and Variation

Every A/B test has two versions, referred to as the Control and the Variation.

- Control: This is the original version of the asset you are testing. It serves as the baseline to compare against.

- Variation: This is the modified version of the asset. It contains one or more changes that you hypothesize will improve performance.

If you are presenting two assets to your audience for the first time, you may consider both versions as variations. The winner will become the control for your next test.

Randomization

The audience is randomly divided into two groups so that each version is tested under similar conditions. This randomness helps eliminate biases, so that differences in results can be attributed to the changes made rather than to differences between the groups.

Key Performance Indicators (KPIs)

A key performance indicator (KPI) is chosen to evaluate the performance of each version.

Common KPIs include click-through rates (CTR), conversion rates, bounce rates, and time on page.

E-commerce businesses frequently test KPIs like Add-To-Cart Rate, Checkout Rate, and Cart Abandonment Rate. Lead-generation businesses frequently test KPIs like Lead Form Conversion, Button Clicks, and more.

By tracking these metrics, you can determine which version performs better.
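Most of these KPIs are simple ratios of counts. As a minimal sketch (the function names and sample counts are hypothetical, and your analytics platform may define each metric slightly differently), they can be computed like this:

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Share of impressions (ad views, email sends, etc.) that produced a click."""
    return clicks / impressions if impressions else 0.0


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal action (sign-up, purchase, lead form)."""
    return conversions / visitors if visitors else 0.0


def cart_abandonment_rate(carts_created: int, purchases: int) -> float:
    """Share of carts that were created but did not end in a purchase."""
    return (carts_created - purchases) / carts_created if carts_created else 0.0


# Hypothetical counts for one version of a page.
print(f"CTR: {click_through_rate(120, 4_000):.1%}")               # 3.0%
print(f"Conversion rate: {conversion_rate(45, 1_500):.1%}")       # 3.0%
print(f"Cart abandonment: {cart_abandonment_rate(200, 50):.1%}")  # 75.0%
```

Computing the same ratio for the control and the variation is what makes the two versions comparable.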

Statistical Significance

How do you know that an A/B test “worked”? Each version must be shown to enough people, for long enough, that the difference you observe is unlikely to be the result of random chance.

Too small a sample size might lead to inconclusive or misleading results.

Example scenario:

Imagine you have a landing page where visitors can sign up for a newsletter. You want to see if changing the call-to-action (CTA) button color from blue to green increases the sign-up rate.

- Control: The current landing page with a blue CTA button.

- Variation: The same landing page but with a green CTA button.

You set up an A/B test where 50% of your visitors see the blue button (control) and 50% see the green button (variation). After running the test for a sufficient amount of time and gathering enough data, you compare the sign-up rates for both versions. If the green button significantly outperforms the blue button, you can implement the green button for all users.
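To see why "enough data" matters in this scenario, here is a small simulation. It is an illustration under stated assumptions, not a real test: the `simulate_split_test` helper and the 5% "true" sign-up rate are hypothetical, and both buttons are deliberately given the same rate, so any gap between blue and green is pure chance.

```python
import random


def simulate_split_test(visitors: int, rate_a: float, rate_b: float, seed: int = 0):
    """Randomly assign each visitor to A or B (50/50) and simulate sign-ups.

    rate_a and rate_b are hypothetical "true" sign-up rates; in a real test they are unknown.
    Returns (visitors_a, signups_a, visitors_b, signups_b).
    """
    rng = random.Random(seed)
    visitors_a = signups_a = visitors_b = signups_b = 0
    for _ in range(visitors):
        if rng.random() < 0.5:  # coin flip: control (blue button)
            visitors_a += 1
            signups_a += int(rng.random() < rate_a)
        else:                   # variation (green button)
            visitors_b += 1
            signups_b += int(rng.random() < rate_b)
    return visitors_a, signups_a, visitors_b, signups_b


# Both buttons get the SAME hypothetical 5% rate, so any gap is pure chance.
for n in (200, 200_000):
    va, sa, vb, sb = simulate_split_test(n, 0.05, 0.05, seed=42)
    print(f"{n:>7,} visitors: blue {sa / va:.1%} vs green {sb / vb:.1%}")
```

With only a few hundred visitors, the two observed rates can differ noticeably even though the buttons perform identically; with hundreds of thousands, both settle close to 5%.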

Types of A/B Split Tests

There are two types of A/B split tests: simple A/B tests and multivariate tests. Both are essential tools in optimization and experimentation, but they differ significantly in scope, complexity, and application. Here’s a detailed breakdown of the differences between the two:

Simple A/B Tests

Testing one thing at a time. A simple A/B test compares two versions of a single element to determine which version performs better.

Only one element is changed between the control (A) and the variation (B). The test isolates one variable to see its direct impact on the chosen metric.

Examples:

- Changing the color of a call-to-action button.

- Testing different headlines for a landing page.

- Comparing two email subject lines.

Advantages:

- Easy to set up, execute, and analyze.

- Results are straightforward since only one variable is changed.

- Requires fewer resources and a smaller sample size compared to more complex tests.

Disadvantages:

- Only tests one change at a time, which can be slow if you have multiple hypotheses.

- May require multiple rounds of testing to optimize several elements.

Multivariate Testing

Testing multiple changes at once. Multivariate testing examines several variables simultaneously to understand the impact of each variable, and of their interactions, on the outcome.

Several elements on a page are changed and tested together. It tests all possible combinations of the variables to see how different combinations perform.

Examples:

- Testing different combinations of headlines, images, and call-to-action buttons on a landing page.

- Examining various layouts and color schemes together.
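Testing all possible combinations multiplies the number of versions quickly. The sketch below lists every combination of some hypothetical headline, image, and CTA options with `itertools.product`; the per-version traffic figure is also an assumption, included only to show how the required traffic scales.

```python
from itertools import product

# Hypothetical options for three landing-page elements.
elements = {
    "headline": ["Save time today", "Cut your costs"],
    "image": ["team photo", "product screenshot", "illustration"],
    "cta": ["Start free trial", "Get a demo"],
}

# Every combination of one option per element (a full-factorial design).
combinations = [dict(zip(elements, combo)) for combo in product(*elements.values())]
print(len(combinations))  # 2 * 3 * 2 = 12

# If each version needed, say, 5,000 visitors (hypothetical), traffic scales with the count.
visitors_per_version = 5_000
print(visitors_per_version * len(combinations))  # 60000
```

A simple A/B test has two versions to fill with traffic; this one has twelve.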

Advantages:

- Provides a deeper understanding of how multiple elements interact and contribute to performance.

- Can optimize several elements simultaneously, leading to faster results.

Disadvantages:

- More complicated to design, execute, and analyze.

- Requires a larger sample size to achieve statistical significance due to the multiple combinations.

- Managing and interpreting the data can be challenging without robust analytical tools.

Conducting Your A/B Test

Follow these steps to execute a winning A/B test.

1. Plan Your A/B Test

Clear goals help ensure that everyone involved in the A/B test understands what success looks like and can interpret the results accurately. Goals should be quantifiable, allowing you to track progress and determine the effectiveness of your changes.

Choose your key performance indicators (KPIs) and make sure that they align with your specific business goals.

Choose the variables you will test, based on which ones you expect to influence your KPIs. Common variables include headlines, calls to action, images, and more. Your variables will depend on your goals and the type of asset you are testing.

2. Design the Experiment

Careful design of your split testing experiment is essential to ensure that your results are reliable, valid, and actionable.

Creating Test Variations

Develop the control version (A) and one or more test variations (B, C, etc.). Each variation should have a specific change or set of changes based on your hypotheses. Ensure that the changes are meaningful and likely to impact the chosen metrics. Avoid making too many changes in a single variation to keep results interpretable. Each variation should be noticeably different from the control. Minor changes might not produce significant results.

Maintain consistency in elements that are not being tested to avoid confounding variables. For example, if you are testing button color, keep the text, size, and placement consistent across variations. Also, make sure that all variations provide a good user experience. Avoid variations that might frustrate users or negatively impact their perception of your brand.

Importance of Randomization

Randomization makes the test and control groups similar, on average, in every respect except the variable being tested. This helps eliminate biases that could skew the results.

Randomly assigning users to different groups enhances the reliability of the results, making it easier to attribute any differences in outcomes to the changes being tested.
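Most testing platforms handle assignment for you. If you need to do it yourself, one common approach (sketched here as an assumption, not as how any particular tool works) is to hash a stable user ID together with an experiment name. The hash behaves like a random draw, so users spread roughly evenly across groups, and the same user sees the same version on every visit. `assign_variant`, the experiment name, and the user IDs are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple = ("control", "variation")) -> str:
    """Deterministically map a user to one of the variants.

    Hashing "experiment:user_id" spreads users roughly evenly across variants,
    and the same user always gets the same variant for the same experiment.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return variants[bucket % len(variants)]


# Hypothetical experiment name and user IDs.
print(assign_variant("user-1001", "cta-button-color"))
counts = {"control": 0, "variation": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "cta-button-color")] += 1
print(counts)  # close to 5,000 each
```

Including the experiment name in the hash means each new experiment reshuffles users independently of earlier ones.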

Ensuring Statistical Significance

This is the most misunderstood aspect of A/B testing. Pay attention to statistical significance. It is important that you run your A/B test long enough to draw a reasonable conclusion. How do you know if your variations should be tested by 100 people, 1,000 people, or 1 million people?

A sufficient sample size ensures that the test results are reliable and statistically significant. Too small a sample size might lead to inconclusive or misleading results.

Here are the key inputs for calculating how large a sample you need to reach statistical significance:

- Baseline Metric: The current KPI metric of your control group. For example, if you are testing for improved lead form conversion rates on a landing page, then your baseline metric would be the current conversion rate on the control version of the landing page.

- Effect Size: The minimum detectable difference between the control and variation that you care about. In other words, does your variation need to perform 1% better or 10% better for the difference to matter to you? This is a measure of how “meaningful” the result should be.

- Confidence Level: This is a measure of how “certain” the result should be: how confident you want to be that a difference you observe is real and not due to chance. You choose it before the test starts. A larger test audience does not change the confidence level you pick; it lets you reach that level of certainty for smaller differences.

For example, if you ask 10 people which version they like, and 7 say “version B”, you may be tempted to feel confident that version B is the winner. But what if you tested another 100 people? How confident are you that 70 out of 100 would say “version B”? It’s not obvious, and your confidence should be low after testing only 10 people. However, if you tested 1 million people and 700,000 said “version B”, you could be extremely confident that version B is the winner.
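One way to put numbers on this example is a confidence interval around the share of people who prefer B. The sketch below uses the Wilson score interval, a standard interval for proportions; the choice of method is ours, not necessarily what the calculators below use. For 7 out of 10, the 95% interval runs from roughly 40% to 89%, which still includes 50% (a tie). For 700,000 out of 1 million, it narrows to about 69.9% to 70.1%.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes: int, n: int, confidence: float = 0.95):
    """Wilson score confidence interval for a proportion."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


for successes, n in [(7, 10), (700_000, 1_000_000)]:
    low, high = wilson_interval(successes, n)
    print(f"{successes:,} of {n:,} prefer B: 95% interval {low:.1%} to {high:.1%}")
```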

Confidence level accounts for the randomness of untested audiences. A/B testers typically set the confidence level at 95% when planning, which means accepting at most a 5% chance of declaring a winner when there is actually no real difference between the versions.

If you wish to dive even deeper into statistical measurements, you can explore p-values.

You can use online calculators or statistical formulas that consider the expected effect size, baseline conversion rate, and desired confidence level. Some of our favorite tools include:

- VWO A/B Test Duration Calculator (simple)

- Optimizely’s Sample Size Calculator (simple)

- AB Testguide (complex)
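For a sense of what these calculators do under the hood, here is a minimal sketch of a common sample-size formula for comparing two conversion rates. It assumes a two-sided test, treats the effect size as a relative lift over the baseline, and adds one input not listed above: statistical power, the chance of detecting a real effect of that size, set here to a conventional 80%. Individual calculators may use different formulas and defaults, so expect their numbers to differ somewhat. `sample_size_per_group` is a hypothetical helper name.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline: float, relative_mde: float,
                          confidence: float = 0.95, power: float = 0.80) -> int:
    """Visitors needed in EACH group for a two-sided, two-proportion z-test.

    baseline:     control conversion rate (e.g. 0.05 for 5%)
    relative_mde: smallest relative lift worth detecting (e.g. 0.10 for +10%)
    power:        chance of detecting a true lift of that size (assumed 80% default)
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


print(sample_size_per_group(0.05, 0.10))  # roughly 31,000 per group
print(sample_size_per_group(0.05, 0.30))  # roughly 3,800 per group
```

With a 5% baseline conversion rate, detecting a 10% relative lift (5.0% to 5.5%) takes roughly 31,000 visitors per group, while a 30% lift needs only a few thousand. Small effects are expensive to detect.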

Running the A/B Test

Now let’s get your A/B test set up and running. Once it is live, the test requires careful monitoring and management to ensure accurate and reliable results.

Setting Up the Test on Your Platform:

Choose a reliable A/B testing platform or tool that fits your needs. Popular options include Optimizely, VWO, and Adobe Target. Many modern digital marketing tools and platforms include built-in A/B testing features, such as “Experiments” in Google Ads or “A/B test” in Klaviyo.

- Configure the test within the chosen tool. This involves specifying the control and variations, defining the audience segments, and setting the duration of the test.

- If the test involves changes to website elements, ensure the code for the variations is correctly implemented. This might involve editing HTML, CSS, JavaScript, or other elements depending on what is being tested.

Track Performance in Real-Time:

Use the A/B testing tool’s dashboard to track key metrics in real-time. Set up alerts to notify you of significant changes or issues during the test. This allows for a quick response if something goes wrong.

Schedule regular check-ins to review the test’s progress and ensure it is running smoothly. Look for any unexpected drops or spikes in performance metrics.

If issues arise, such as technical errors, incorrect implementation, or unexpected user behavior, troubleshoot and address them promptly. Document any changes made to maintain the integrity of the test. It’s better to catch errors early. Nobody wants to ignore an active A/B test for two weeks only to find out that it was set up improperly.
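One concrete check worth automating is a sample ratio mismatch (SRM) test: if you configured a 50/50 split but the groups are receiving noticeably unequal traffic, something in the setup is likely broken. The sketch below uses a chi-square goodness-of-fit test; the helper name, the mid-test counts, and the `p < 0.001` alert threshold are assumptions for illustration.

```python
from math import erfc, sqrt


def srm_p_value(control_users: int, variation_users: int) -> float:
    """Chi-square goodness-of-fit p-value for an intended 50/50 split (1 degree of freedom)."""
    total = control_users + variation_users
    expected = total / 2
    chi2 = ((control_users - expected) ** 2 + (variation_users - expected) ** 2) / expected
    # For 1 degree of freedom, P(chi2 > x) = erfc(sqrt(x / 2)).
    return erfc(sqrt(chi2 / 2))


p = srm_p_value(50_210, 48_900)  # hypothetical mid-test counts
if p < 0.001:  # assumed alert threshold
    print(f"Possible sample ratio mismatch (p = {p:.2g}); check the setup before trusting results.")
```

A low p-value here does not say which version is better; it says the traffic split itself looks wrong.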

Avoid Biases and Ensure Consistency:

Be aware that external factors, such as marketing campaigns, seasonal trends, or shifts in user behavior, can skew the results of a test. Run the test under conditions that are as consistent as possible.

Maintain a random assignment of users to control and test groups to avoid selection bias. The groups should be similar in composition and behavior. In other words, if you are testing chocolate vs vanilla, don’t test it on 100 people who you know already order vanilla ice cream.

Analyzing Test Results

Analyzing test results is a critical phase in the split testing process. It involves collecting data, performing statistical analysis, and making informed decisions based on the outcomes.

Gather Data from Your Test:

After the test is complete, gather data from your A/B testing platform. Carefully review the collected data and statistical analyses. Look for trends, patterns, and any anomalies that might affect the interpretation.

Ensure that the confidence intervals support the conclusion that the observed differences are statistically significant. You can plug your data into any of the tools listed above in the section on statistical significance. If you want to use the simplest calculator possible just to determine certainty of your data, try this one.

Determining the Winning Variation:

Compare the key metrics of the control and variation groups. Identify which version performed better in terms of the defined goals and objectives.

Confirm that the performance difference is statistically significant. Only consider a variation as the winner if it shows a significant improvement over the control. (95% confidence is the standard.)
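If your platform does not report significance directly, a two-proportion z-test is one standard way to compare two conversion rates. The sketch below is a minimal version under a few assumptions: a two-sided test, reasonably large groups with at least some conversions in each, and hypothetical counts (200 conversions out of 10,000 for the control, 260 out of 10,000 for the variation). A p-value below 0.05 corresponds to the 95% confidence standard.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test comparing conversion rates of control (A) and variation (B).

    Returns (relative_lift_of_b, p_value).
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate if there were no difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return (rate_b - rate_a) / rate_a, p_value


lift, p = two_proportion_z_test(200, 10_000, 260, 10_000)  # hypothetical counts
winner = "variation" if p < 0.05 and lift > 0 else "no clear winner"
print(f"lift {lift:.1%}, p = {p:.4f} -> {winner}")
```

With these counts the variation shows a 30% relative lift and a p-value well under 0.05; a 5% lift on the same traffic (210 vs 200 conversions) would not clear the bar.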

Handling Inconclusive Results:

Sometimes, the results may not show a clear winner. This can happen if the changes made were too subtle, the sample size was too small, or external factors influenced the test.

If results are inconclusive, consider re-testing with a larger sample size or a longer testing period. Or reevaluate your hypotheses and test different variables or changes that might have a more substantial impact. You may wish to perform a deeper analysis by segmenting the audience to identify whether specific user groups responded differently to the test variations.
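A segment breakdown can start as simply as a conversion rate per segment and version. The records and segment names below are hypothetical; in practice you would export them from your analytics or testing platform. Keep in mind that each segment is smaller than the full audience, so a segment-level difference needs its own significance check before you act on it.

```python
from collections import defaultdict

# Hypothetical per-visitor records: (segment, variant, converted)
visits = [
    ("mobile", "A", True), ("mobile", "B", False), ("desktop", "A", False),
    ("desktop", "B", True), ("mobile", "B", True), ("desktop", "A", True),
]


def rates_by_segment(records):
    """Conversion rate for each (segment, variant) pair."""
    totals = defaultdict(lambda: [0, 0])  # [conversions, visitors]
    for segment, variant, converted in records:
        totals[(segment, variant)][0] += int(converted)
        totals[(segment, variant)][1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


for (segment, variant), rate in sorted(rates_by_segment(visits).items()):
    print(f"{segment:<8} {variant}: {rate:.0%}")
```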

Implementing Changes

No split test is complete without celebrating the winning variant. Make sure that you implement the changes that produce the best result. Deploy the winning variation to your entire audience. Ensure that the implementation is smooth and does not introduce any new issues.

Before full implementation, conduct thorough QA testing to ensure that the winning variation works correctly across all devices, browsers, and platforms. Document the changes made, the reasons for these changes, and the expected outcomes. This provides a reference for future tests and helps in tracking the impact of the changes.

Next, make your winner the “control” version of your next A/B test!

Case Studies & Examples

One of our clients, a Fortune 500 financial business, hired us to lead their go-to-market strategy for a new subscription service they created. We put together a paid media plan as a part of this strategy. Google Ads was a major component of the paid media plan.

After a few months of launching new campaigns and analyzing results, we hypothesized that we could get even more conversions from the same ads simply by improving the landing page.

To test this hypothesis, we created an A/B test:

- The existing landing page represented the control (A). We created a second improved version of the landing page to represent the variant (B).

- Using the Experiments feature of Google Ads, we created a 1-month split test to send equal traffic to the control and the variant. After one month, we would evaluate whether we had enough performance data for statistical significance.

- We chose cost per conversion as our primary KPI and the metric of success. In other words, how many conversions could we achieve for the same ad spend?

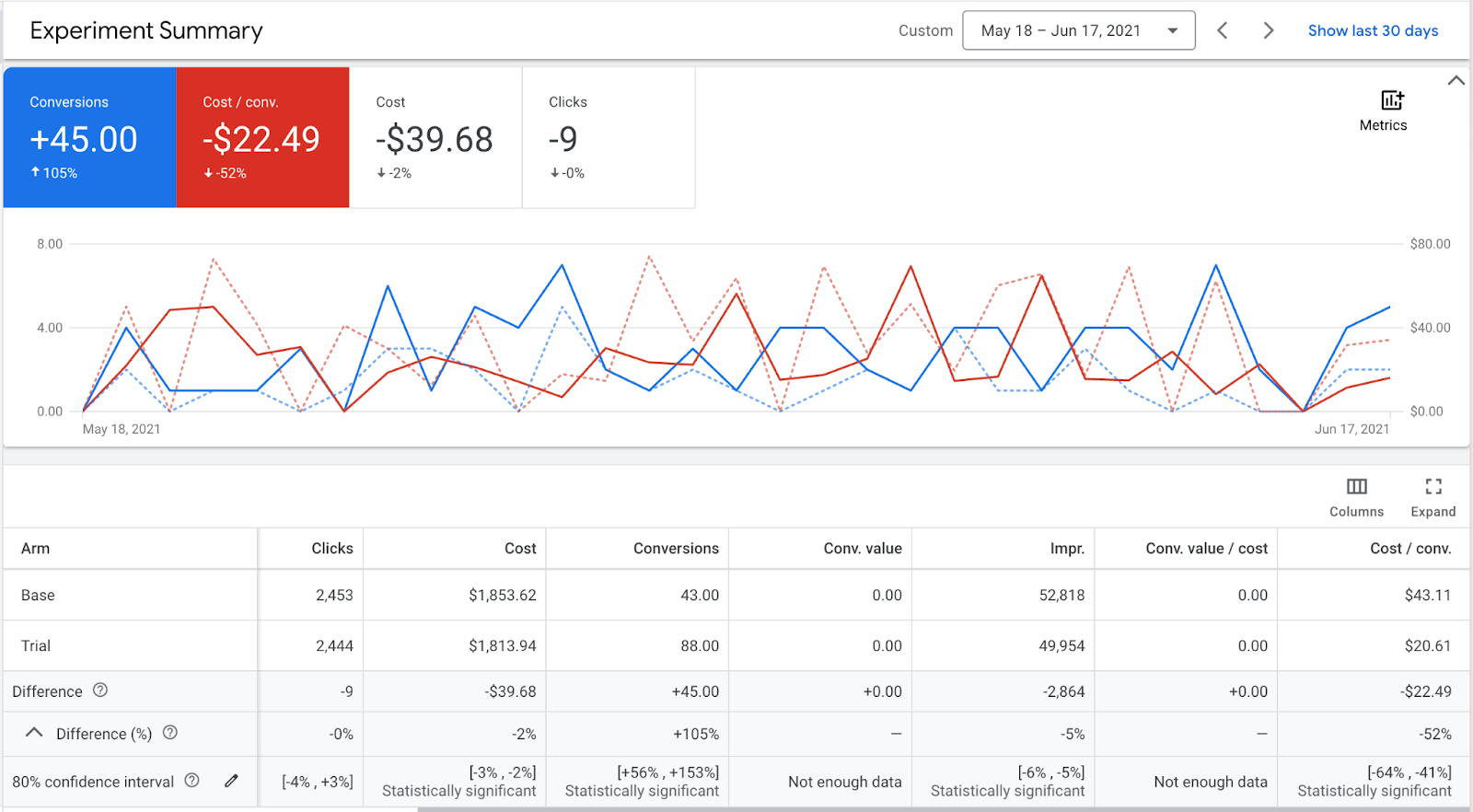

- After one month had passed, we looked back at the results.

- The control (original landing page) produced 43 conversions from $1,853.62 of ad spend, leading to a $43.11 cost per conversion.

- The variant (new landing page) produced 88 conversions from $1,813.94 of ad spend, leading to a $20.61 cost per conversion.
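The cost-per-conversion figures above are easy to reproduce. This short snippet uses only the spend and conversion numbers reported in the case study:

```python
# Spend and conversions reported in the case study (one-month Google Ads Experiment).
results = {
    "control (A)": {"spend": 1853.62, "conversions": 43},
    "variant (B)": {"spend": 1813.94, "conversions": 88},
}


def cost_per_conversion(spend: float, conversions: int) -> float:
    """Ad spend divided by the number of conversions it produced."""
    return spend / conversions


cpa = {name: cost_per_conversion(**r) for name, r in results.items()}
for name, value in cpa.items():
    print(f"{name}: ${value:.2f} per conversion")  # $43.11 and $20.61

ratio = cpa["control (A)"] / cpa["variant (B)"]
print(f"Each conversion cost {ratio:.1f}x as much on the control page.")  # 2.1x
```

Each conversion on the control page cost a little more than twice as much as on the variant. That gap alone does not establish significance, which is why the next step was to check it.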

Initially, we were tempted to celebrate this as a victory. But before we could apply the B variant permanently, we wanted to check the statistical significance.

During the setup of this Experiment, we input an 80% confidence level as a goal. Google does a decent job of displaying the statistical significance of the experiment once it is complete (see the last row in the image).

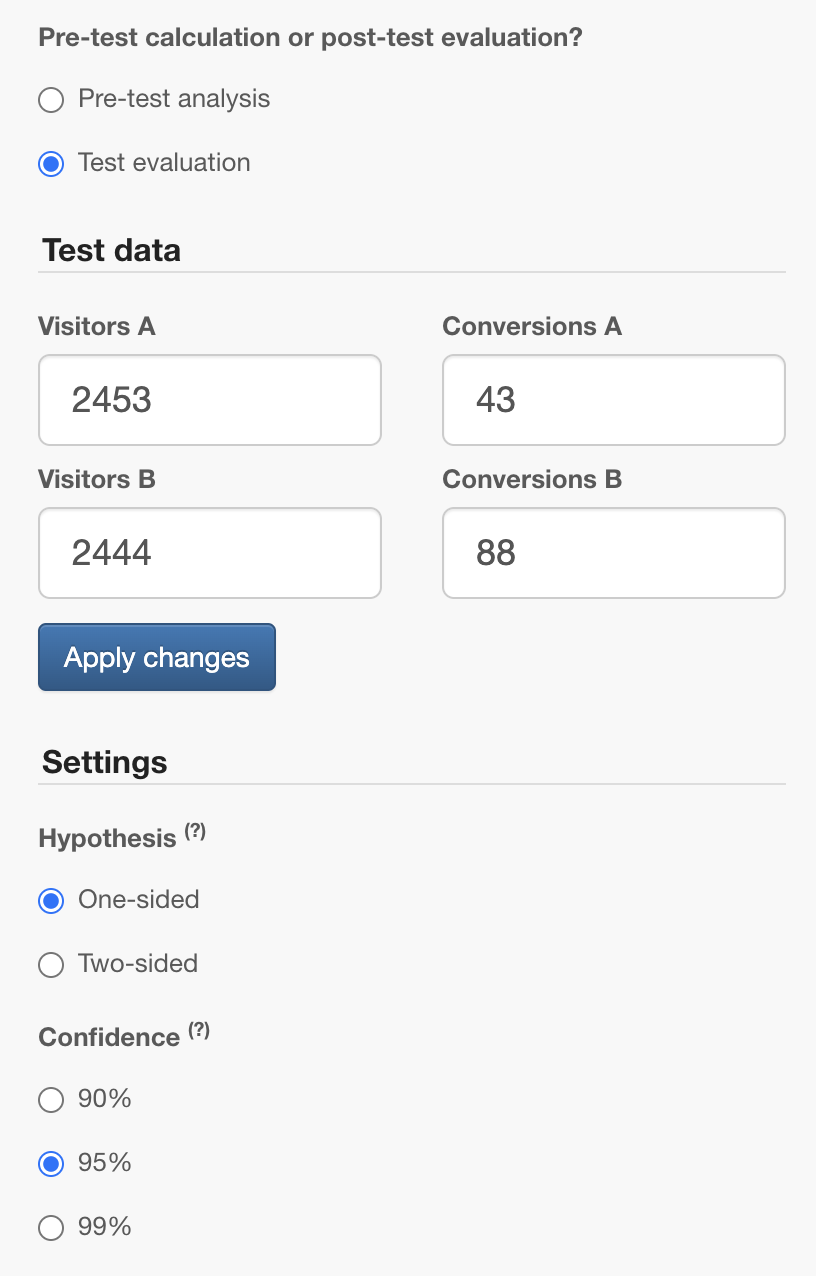

However, we wanted to check whether the result would hold at a stricter confidence level than our initial 80% goal, so we used the AB Testguide calculator.

We input the result metrics and set a 95% confidence level as the goal.

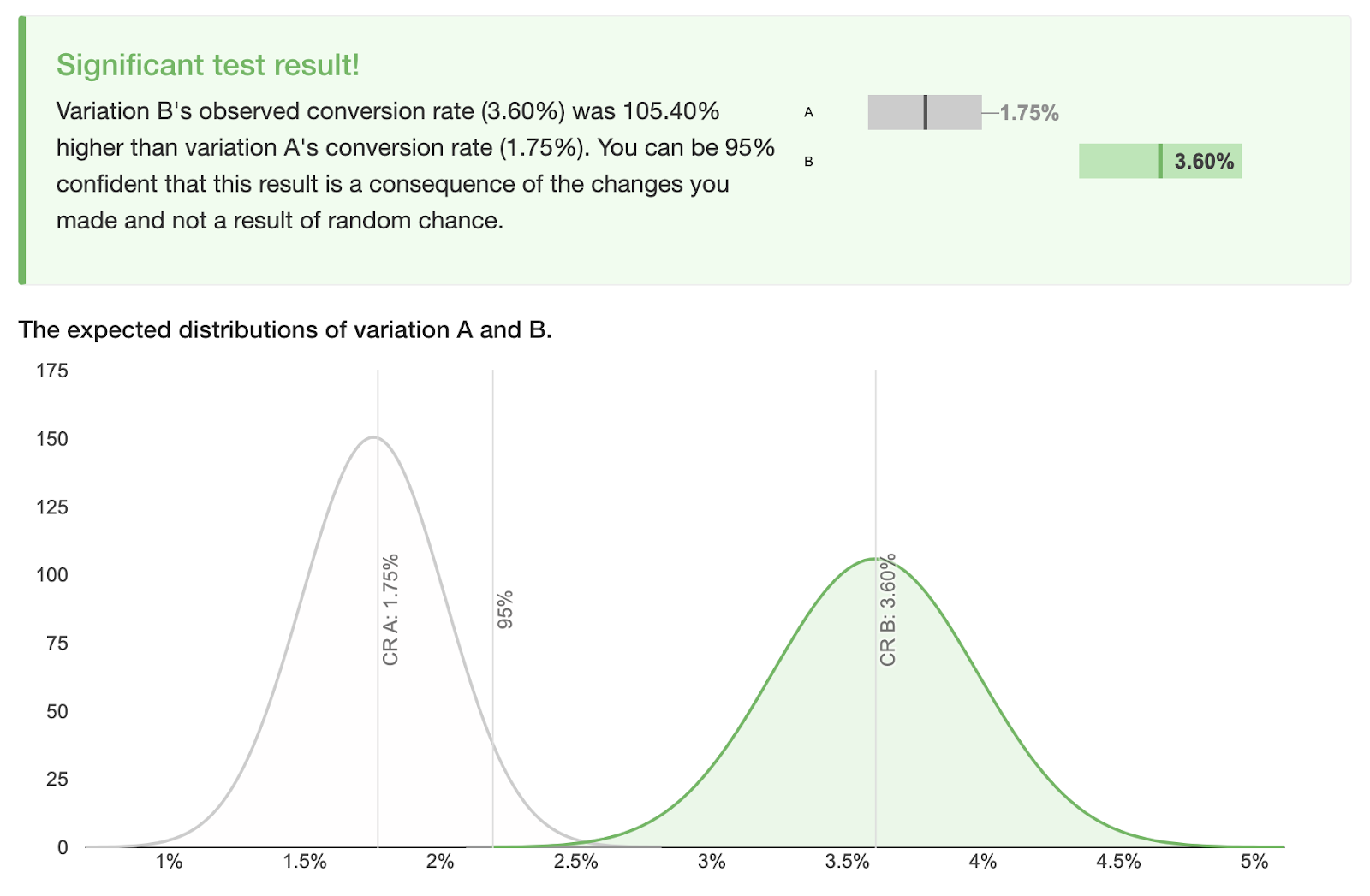

The result? A statistically significant test at the 95% confidence level! The B variation had a 105.4% higher conversion rate than the A variation.

Conclusion

The last step was to implement the changes. We applied the winning landing page variant as the permanent destination for this campaign, thereby enjoying roughly double the conversions for the same ad spend for the foreseeable future.